|

T A B L E O F C O N T E N T S

S E P T E M B E R / O C T O B E R 2 0 1 6

Volume 22, Number 9/10

DOI: 10.1045/september2016-contents

ISSN: 1082-9873

E D I T O R I A L

Virtuous Cycle

Editorial by Laurence Lannom, Corporation for National Research Initiatives

Current Research on Mining Scientific Publications

Guest Editorial by Drahomira Herrmannova and Petr Knoth, Knowledge Media Institute, The Open University

A R T I C L E S

Rhetorical Classification of Anchor Text for Citation Recommendation

Article by Daniel Duma and Ewan Klein, University of Edinburgh; Maria Liakata and James Ravenscroft, University of Warwick; Amanda Clare, Aberystwyth University

Abstract: Wouldn't it be helpful if your text editor automatically suggested papers that are contextually relevant to your work? We concern ourselves with this task: we desire to recommend contextually relevant citations to the author of a paper. A number of rhetorical annotation schemes for academic articles have been developed over the years, and it has often been suggested that they could find application in Information Retrieval scenarios such as this one. In this paper we investigate the usefulness for this task of CoreSC, a sentence-based, functional, scientific discourse annotation scheme (e.g. Hypothesis, Method, Result, etc.). We specifically apply this to anchor text, that is, the text surrounding a citation, which is an important source of data for building document representations. By annotating each sentence in every document with CoreSC and indexing them separately by sentence class, we aim to build a more useful vector-space representation of documents in our collection. Our results show consistent links between types of citing sentences and types of cited sentences in anchor text, which we argue can indeed be exploited to increase the relevance of recommendations.

Temporal Properties of Recurring In-text References

Article by Iana Atanassova, Centre Tesniere, University of Franche-Comte, France and Marc Bertin, Centre Interuniversitaire de Rercherche sur la Science et la Technologie (CIRST), Université du Québec à Montréal (UQAM)

Abstract: In this paper we study the properties of recurring in-text references in research articles and more specifically their positions in the rhetorical structure of articles and their age with respect to the citing article. We have processed a large scale corpus of approximately 80,000 papers published by PLOS (Public Library of Science). We examine the number and types of recurring in-text references, as well as their age according to positions in the IMRaD structure. (Introduction, Methods, Results, and Discussion). The results show that the age of recurring references varies considerably in all sections and journals. While they are especially dense in the Introduction section, most of them reappear in the beginning of the Results and the Discussion sections. We also observe that the beginning of the Methods and the Results sections share a significant number of recurring references.

The Impact of Academic Mobility on the Quality of Graduate Programs

Article by Thiago H. P. Silva, Alberto H. F. Laender, Clodoveu A. Davis Jr., Ana Paula Couto da Silva and Mirella M. Moro, Universidade Federal de Minas Gerais, Brazil

Abstract: The large amount of publicly available scholarly data today has allowed exploration of new aspects of research collaboration, such as the evolution of scientific communities, the impact of research groups and the social engagement of researchers. In this paper, we discuss the importance of characterizing the trajectories of faculty members in their academic education and their impact on the quality of the graduate programs they are associated with. In that respect, we analyze the mobility of faculty members from top Brazilian Computer Science graduate programs as they progress through their academic education (undergraduate, master's, PhD and post-doctorate). Our findings indicate that the number of faculty members who are educated abroad are an important indicator of the quality of these graduate programs because they tend to publish more often, and in higher quality venues.

Preliminary Study on the Impact of Literature Curation in a Model Organism Database on Article Citation Rates

Article by Tanya Berardini and Leonore Reiser, The Arabidopsis Information Resource; Ron Daniel Jr. and Michael Lauruhn, Elsevier Labs

Abstract: Literature curation by model organism databases (MODs) results in the interconnection of papers, genes, gene functions, and other experimentally supported biological information, and aims to make research data more discoverable and accessible to the research community. That said, there is a paucity of quantitative data about if and how literature curation affects access and reuse of the curated data. One potential measure of data reuse is the citation rate of the article used in curation. If articles and their corresponding data are easier to find, then we might expect that curated articles would exhibit different citation profiles when compared to articles that are not curated. That is, what are the effects of having scholarly articles curated by MODs on their citation rates? To address this question we have been comparing the citation behavior of different groups of articles and asking the following questions: (1) given a collection of 'similar' articles about Arabidopsis, is there a difference in the citation numbers between articles that have been curated in TAIR (The Arabidopsis Information Resource) and ones that have not, (2) for articles annotated in TAIR, is there a difference in the citation behavior before vs. after curation and, (3) is there a difference in citation behavior between Arabidopsis articles added to TAIR's database and those that are not in TAIR? Our data indicate that curated articles do have a different citation profile than non-curated articles that appears to result from increased visibility in TAIR. We believe data of this type could be used to quantify the impact of literature curation on data reuse and may also be useful for MODs and funders seeking incentives for community literature curation. This project is a research partnership between TAIR and Elsevier Labs.

Measuring Scientific Impact Beyond Citation Counts

Article by Robert M. Patton, Christopher G. Stahl and Jack C. Wells, Oak Ridge National Laboratory

Abstract: The measurement of scientific progress remains a significant challenge exasperated by the use of multiple different types of metrics that are often incorrectly used, overused, or even explicitly abused. Several metrics such as h-index or journal impact factor (JIF) are often used as a means to assess whether an author, article, or journal creates an "impact" on science. Unfortunately, external forces can be used to manipulate these metrics thereby diluting the value of their intended, original purpose. This work highlights these issues and the need to more clearly define "impact" as well as emphasize the need for better metrics that leverage full content analysis of publications.

An Analysis of the Microsoft Academic Graph

Article by Drahomira Herrmannova and Petr Knoth, Knowledge Media Institute, The Open University, Milton Keynes, UK

Abstract: In this paper we analyse a new dataset of scholarly publications, the Microsoft Academic Graph (MAG). The MAG is a heterogeneous graph comprised of over 120 million publication entities and related authors, institutions, venues and fields of study. It is also the largest publicly available dataset of citation data. As such, it is an important resource for scholarly communications research. As the dataset is assembled using automatic methods, it is important to understand its strengths and limitations, especially whether there is any noise or bias in the data, before applying it to a particular task. This article studies the characteristics of the dataset and provides a correlation analysis with other publicly available research publication datasets to answer these questions. Our results show that the citation data and publication metadata correlate well with external datasets. The MAG also has very good coverage across different domains with a slight bias towards technical disciplines. On the other hand, there are certain limitations to completeness. Only 30 million papers out of 127 million have some citation data. While these papers have a good mean total citation count that is consistent with expectations, there is some level of noise when extreme values are considered. Other current limitations of MAG are the availability of affiliation information, as only 22 million papers have these data, and the normalisation of institution names.

Scraping Scientific Web Repositories: Challenges and Solutions for Automated Content Extraction

Article by Philipp Meschenmoser, Norman Meuschke, Manuel Hotz and Bela Gipp, Department of Computer and Information Science, University of Konstanz, Germany

Abstract: Aside from improving the visibility and accessibility of scientific publications, many scientific Web repositories also assess researchers' quantitative and qualitative publication performance, e.g., by displaying metrics such as the h-index. These metrics have become important for research institutions and other stakeholders to support impactful decision making processes such as hiring or funding decisions. However, scientific Web repositories typically offer only simple performance metrics and limited analysis options. Moreover, the data and algorithms to compute performance metrics are usually not published. Hence, it is not transparent or verifiable which publications the systems include in the computation and how the systems rank the results. Many researchers are interested in accessing the underlying scientometric raw data to increase the transparency of these systems. In this paper, we discuss the challenges and present strategies to programmatically access such data in scientific Web repositories. We demonstrate the strategies as part of an open source tool (MIT license) that allows research performance comparisons based on Google Scholar data. We would like to emphasize that the scraper included in the tool should only be used if consent was given by the operator of a repository. In our experience, consent is often given if the research goals are clearly explained and the project is of a non-commercial nature.

Quantifying Conceptual Novelty in the Biomedical Literature

Article by Shubhanshu Mishra and Vetle I. Torvik, University of Illinois at Urbana-Champaign

Abstract: We introduce several measures of novelty for a scientific article in MEDLINE based on the temporal profiles of its assigned Medical Subject Headings (MeSH). First, temporal profiles for all MeSH terms (and pairs of MeSH terms) were characterized empirically and modelled as logistic growth curves. Second, a paper's novelty is captured by its youngest MeSH (and pairs of MeSH) as measured in years and volume of prior work. Across all papers in MEDLINE published since 1985, we find that individual concept novelty is rare (2.7% of papers have a MeSH ≤ 3 years old; 1.0% have a MeSH ≤ 20 papers old), while combinatorial novelty is the norm (68% have a pair of MeSH ≤ 3 years old; 90% have a pair of MeSH ≤ 10 papers old). Furthermore, these novelty measures exhibit complex correlations with article impact (as measured by citations received) and authors' professional age.

Capturing Interdisciplinarity in Academic Abstracts

Article by Federico Nanni, Data and Web Science Research Group, University of Mannheim, Germany and International Centre for the History of Universities and Science, University of Bologna, Italy; Laura Dietz, Stefano Faralli, Goran Glavaš and Simone Paolo Ponzetto, Data and Web Science Research Group, University of Mannheim, Germany

Abstract: In this work we investigate the effectiveness of different text mining methods for the task of automated identification of interdisciplinary doctoral dissertations, considering solely the content of their abstracts. In contrast to previous attempts, we frame the interdisciplinarity detection as a two step classification process: we first predict the main discipline of the dissertation using a supervised multi-class classifier and then exploit the distribution of prediction confidences of the first classifier as input for the binary classification of interdisciplinarity. For both supervised classification models we experiment with several different sets of features ranging from standard lexical features such as TF-IDF weighted vectors over topic modelling distributions to latent semantic textual representations known as word embeddings. In contrast to previous findings, our experimental results suggest that interdisciplinarity is better detected when directly using textual features than when inferring from the results of main discipline classification.

N E W S & E V E N T S

In Brief: Short Items of Current Awareness

In the News: Recent Press Releases and Announcements

Clips & Pointers: Documents, Deadlines, Calls for Participation

Meetings, Conferences, Workshops: Calendar of Activities Associated with Digital Libraries Research and Technologies

|

|

F E A T U R E D D I G I T A L

C O L L E C T I O N

NIH Data Sharing Repositories

Immunology Database and Analysis Portal (ImmPort)

Biologic Specimen and Data Repository Information Coordinating Center

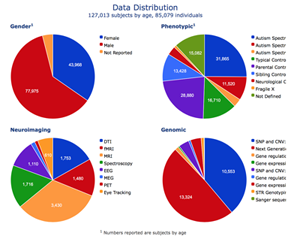

National Database for Autism Research (NDAR)

Database of Genotypes and Phenotypes (dbGaP)

iDASH (Integrating Data for Analysis, Anonymization, and SHaring)

The web site being featured in this issue of D-Lib Magazine is the National Institutes of Health (NIH) Data Sharing Repositories. It is one of several resources provided by the Trans-NIH BioMedical Informatics Coordinating Committee (BMIC), which was established in the Spring of 2007 to improve communication and coordination of issues related to clinical- and bio-informatics at NIH. The Committee provides a forum for sharing information about NIH informatics programs, projects, and plans, including their relationship to activities of other federal agencies and non-government organizations.

The NIH Data Sharing Repositories site lists dozens of NIH-supported data repositories that make data accessible for reuse. Most accept submissions of appropriate data from NIH-funded investigators (and others), but some restrict data submission to only those researchers involved in a specific research network. Also included are resources that aggregate information about biomedical data and information sharing systems. The table can be sorted according by name and by NIH Institute or Center and may be searched using keywords so that you can find repositories more relevant to your data. Links are provided to information about submitting data to and accessing data from the listed repositories. Additional information about the repositories and points-of-contact for further information or inquiries can be found on the websites of the individual repositories.

The National Institutes of Health is a part of the U.S. Department of Health and Human Services and is the nation's medical research agency.

D - L I B E D I T O R I A L S T A F F

Laurence Lannom, Editor-in-Chief

Allison Powell, Associate Editor

Catherine Rey, Managing Editor

Bonita Wilson, Contributing Editor

|