D-Lib Magazine

January/February 2016

Volume 22, Number 1/2

Table of Contents

Desktop Batch Import Workflow for Ingesting Heterogeneous Collections: A Case Study with DSpace 5

Jacob L. Nash

University of New Mexico

jlnash@salud.unm.edu

orcid.org/0000-0001-6992-8622

Jonathan Wheeler

University of New Mexico

jwheel01@unm.edu

orcid.org/0000-0002-7166-3587

DOI: 10.1045/january2016-nash

Printer-friendly Version

Abstract

The release of DSpace 5 has made batch importing of zipped Simple Archive Format (SAF) directories via the online user interface a possibility. This improved functionality supports a repository administrator's ability to perform batch ingest operations by removing the need to utilize the command-line, but staging large collections for ingest can be time consuming. Additionally, the SAF specification does not lend itself to an efficient desktop workflow. We describe a lightweight and easily adopted Python workflow for packaging heterogeneous collections in Simple Archive Format for batch ingest into DSpace.

1 Introduction

A number of researchers have written about the benefits of batch importing as a means to populate an institutional repository (IR). Many IRs have been implemented with a mentality of "If you build it, they will come" yet have not seen widespread adoption. (Foster & Gibbons, 2005) Thus, turning to archival collections, electronic theses and dissertations (ETDs), and other material has made it possible to populate IRs with quality scholarly content. Bruns, et al. (2014) describe the launch of Eastern Illinois' repository, The Keep, and the usefulness of batch importing documents with pre-existing metadata in order to quickly add content. Avercamp & Lee (2009) describe a similar process of batch ingesting ETD's using repurposed ProQuest metadata to populate their repository. Some have also turned to archival or "legacy" scholarship produced at their institutions to digitize and import into their repository (for example, see Shreeves & Teper, 2012).

Batch importing can also be a means of enlarging the capability of an IR to serve as an archival complement to domain or other external repositories. In addition to serving as a full text store-house and federated discovery layer for third party indexing services to which a given domain repository may not be exposed, IRs often provide unique preservation features which justify mirroring collections or items already published by non-archival systems (see, for example, Wheeler & Benedict, 2015). Similar to the relationship between PubMed and PubMed Central, the default Open Access (OA) policies of many IRs further support collection development strategies focused on using the IR as a low-barrier option for meeting funder or other OA publishing requirements. However, given limitations on human resources, these types of collection development activity provide clear use cases for batch ingest procedures.

The inclusion of a web-based, batch import GUI in DSpace versions 5 and above offers repository managers an opportunity to more efficiently capitalize on batch ingest processes in support of collection development. While batch import has been a longstanding DSpace feature, the process was previously executed via a server side command line utility available only to server administrators, and required the content for ingest to likewise be uploaded to the server backend via FTP or other means. Although this prior procedure was not complex or time-intensive, the necessary coordination and server-side activity relegated batch ingest to a periodic activity rather than a desktop workflow capable of supporting a marketable service. In addition to being streamlined and more accessible to most repository managers, the GUI batch import feature only requires admin level privileges over the DSpace application, not the server on which it resides. While the development of utilities for incorporating the full process into the graphical user interface (GUI) is promising (see, for example, Simple Archive Format Packager), for repository managers interested in capitalizing on the upgraded import feature to develop content and promote the IR as a service, we describe a case study in which a simple, Python based workflow was used to ingest a complete collection via the new GUI.

2 Native Health Database

The Native Health Database (NHD) was developed in-house at the Health Sciences Library and Informatics Center at UNM. It is a bibliographic database that contains "abstracts of health-related articles, reports, surveys, and other resource documents pertaining to the health and health care of American Indians, Alaska Natives, and Canadian First Nations. The database provides information for the benefit, use, and education of organizations and individuals with an interest in health-related issues, programs, and initiatives regarding North American indigenous peoples". (See About the NHD.) Many of the records in the NHD are government documents and other copyright-clear items that were digitized prior to 2015 in support of a previous initiative. Designed as a discovery tool, the NHD provides metadata indexing and discovery as well as link-out capabilities to full text, but in itself did not provide storage or access to the full text prior to the close of the initial project. Scanned documents were therefore made accessible via a collection in LoboVault, a DSpace instance and the institutional repository of the University of New Mexico. Content available in LoboVault included NHD accession numbers as identifiers, while NHD records were updated to include full content links via corresponding DSpace generated handles. As of April, 2015, roughly 100 items had been manually uploaded to LoboVault. Due to available human resources, timely completion of the project and upload of the remaining (roughly 150) items necessitated a batch ingest procedure.

3 Pre-processing and Quality Control

The content model of the NHD (or other source collections) is relevant to the SAF specification and must be accounted for prior to packaging. Minimally, repository managers need to determine ahead of time how existing metadata will be mapped to the Dublin Core (DC) application schema employed by DSpace. Additionally, content files need to be organized and quality assured prior to packaging. The process described here does not require that metadata and content files be stored together.

Development of the metadata model benefitted from previous work with the collection, in particular a metadata enrichment project on the existing NHD items in LoboVault that was completed in fall 2014. Prior to then, minimal title and author metadata had been provided during manual submission of NHD items into LoboVault. To capitalize on the full potential of the IR as an archival mirror of the production database, the authors completed an HTML harvest of existing NHD metadata, mapped the metadata to items that had been uploaded using the accession numbers described above, and transformed metadata fields within the HTML to a CSV file which was imported into LoboVault using the existing DSpace metadata import feature. While the overall process differed in important aspects from the ingest workflow which followed, the experience yielded insight into the application of the Dublin Core metadata schema within DSpace. In particular, there were cases in which the harvested HTML metadata included multiple fields, such as dates and identifiers, which mapped to a single DC field. Resolving such conflicts variously required the concatenation of some fields and the removal of others, and thus the requirements for normalizing and cleaning up the metadata for new items were established well ahead of the ingest project.

Additionally, it became clear that the harvested HTML, regardless of the quality of the contained metadata, required too much preprocessing to be a useful resource within the SAF packaging process. To ingest the remaining set of individual items (n=163), we therefore contacted the NHD administrator to acquire a SQL export of all the NHD metadata. The received metadata were imported into Excel to address the field mapping and concatenation issues described above, and the final set of normalized metadata was exported to CSV per the requirements of our Python SAF packaging workflow.

The content files themselves, which are converted and referred to as bitstreams within DSpace, were likewise subject to quality control processes ahead of packaging. Some consistencies were in place from the original digitization initiative: All of the files were in PDF format, and each one was named using a locally developed accession number. Beyond that, normalization of one-to-many associations was required as single items often contained multiple associated bitstreams (404 bitstreams to 163 items). Lengthy or multi-part reports cataloged within the NHD were often separated into multiple files, and beyond the base accession number a variety of notations were in place to associate related files (for example "pt2" or "1 of 2"). Many of the files we had were duplicates, and filenames also frequently included spaces. Some of these issues, such as deduplication, were quickly resolved using additional Python scripts, while others were resolved manually. Elimination of special characters and spaces from filenames was incorporated into the SAF packaging workflow.

4 Packaging the Collection

Because the steps required to normalize, deduplicate or otherwise modify the content staged for ingest will vary in collection-specific ways, the process described below includes the assumption that all such quality control processes have been completed beforehand and that the bitstreams and metadata have been standardized with regard to content and format.

We developed a Python script to process all the items, their associated metadata, and create an ingest package per the specifications of the DSpace Simple Archive Format. Similar to the Bagit archiving tool developed by the Library of Congress, ingest packages in Simple Archive Format consist of a set of per item directories compressed within a single zip file. Each item directory contains an XML file which conforms to the DSpace Dublin Core application profile (dublin_core.xml), a manifest of bitstreams and their types (contents), and the content files or bitstreams themselves. Besides Dublin Core, additional metadata schema can be incorporated as described in the referenced documentation.

The code is available on LoboGit, a Git service sponsored by the UNM Libraries, and has been released under a Creative Commons Attribution 4.0 (CC BY 4.0) license. While the procedure described here was executed using common Python shells including IDLE and an Emacs interpreter, for future versions the authors are investigating implementation as an iPython notebook offering a somewhat more intuitive GUI based approach with improved documentation features.

Broadly, the script processes a directory of bitstreams, crosswalks metadata into DSpace Dublin Core XML, writes a contents file for each item and compiles everything into a single directory which in itself is an ingest package meeting the SAF specification. As noted above, the bitstreams and associated metadata do not need to be stored within a single directory, and a unique directory or location for the completed, ingest-ready packages may also be specified. This behavior is similar to the batch ingest workflow within the CONTENTdm desktop client, for example, and supports the common use case in which metadata is stored on a local workstation whereas bitstreams reside on shared, networked storage and may additionally be too large to conveniently move or copy ahead of packaging. Consequently, the immediate parameters requested by the script include the destination directory for the finished packages and the top level directory containing the content files or bitstreams (which may reside in subdirectories).

Based on prior experience with packaging sets of items for batch ingest, the procedure is designed to accommodate two types of metadata: structured metadata in CSV format, which can be easily exported from an Excel or other spreadsheet, and, in cases where little or no metadata has been provided, file information can be read and converted to date and title metadata. A path to a metadata file is requested only in cases where CSV is selected as the existing metadata format. In either case, once a metadata format has been selected, users have the option to specify global metadata to be applied to all items. Currently, the process asks for Dublin Core element names and qualifiers, and so requires some knowledge of the DSpace Dublin Core schema. Representation of the schema for simplified field mapping is planned for future versions.

Of necessity, the process for packaging items with file level information is simple and requires little interaction from the user. Once file information is selected as the source for "existing" metadata, no further input is requested. Filenames are used to generate item level package directory names and, barring errors, the process runs to completion.

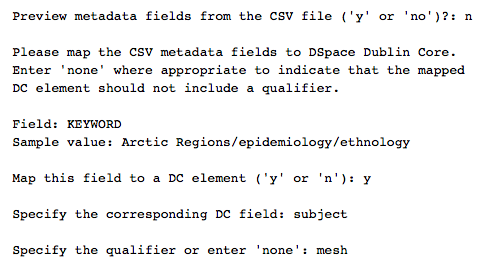

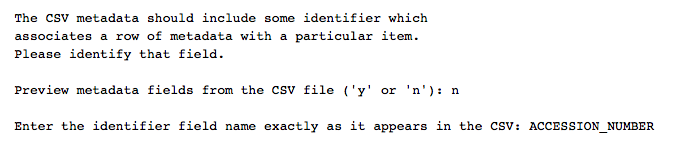

The more interactive process for packaging CSV metadata first requests users to map the fields in the source metadata to Dublin Core. An option is provided to preview the CSV field headings beforehand, and sample values are generated as the script iterates through the set of fields. In addition to helping disambiguate usage in cases where two or more fields may be indistinguishable by name, printing sample field values assists with data typing (for example, determining whether dates have been provided per the ISO 8601 format) and with determining whether the metadata maps to a simple or qualified Dublin Core type. Here again, some knowledge of the specific application schema used by DSpace, in addition to any local customizations, is necessary as both the base element and an optional qualifier are requested (Figure 1). As a final parameter, users must specify a field corresponding to bitstream filenames in order to associate those files with their respective metadata (Figure 2).

Figure 1: A sampling from the interactive scrip in Canopy, a python environment. In this example, the NHD KEYWORD field is being mapped to dc.subject.mesh.

Figure 2: After processing all the metadata fields in the CSV file, the script asks the user which field to use to associate metadata with items.

The remainder of the packaging process, which runs without user interaction, first makes and copies the content files to a corresponding item level package directory. Once copied, content files are renamed to remove special characters and whitespaces before the manifest (contents) is generated by printing a directory listing to file and appending the appropriate DSpace "bundle" type. Finally, the global and CSV mapped metadata, where applicable, are parsed as XML and saved as the item's required dublin_core.xml file.

On successful completion, the script will return three error files for errors related to XML (metadata) errors, bitstreams, or other nonspecific problems. Metadata errors refer to missing or incorrectly defined fields, and bitstream errors reference item filenames included in the metadata file for which there is no corresponding bitstream in the item's package directory. After processing everything, identified errors can be corrected manually by adding a missing bitstream to its package directory or copying the missing information into the specified XML metadata file. More systemic errors, such as an incorrect use of metadata fields across the entire collection, are best fixed by resolving the error in the source file(s) and re-running the script. Because the source metadata and content files are not altered by the script, redoing the process is a simple matter of deleting the previous output and starting over.

Because the GUI ingest requires items to be uploaded using a compressed archive format, a future version of the script may include an optional function to copy the staged items into one or more zip files. Such a feature has not been implemented at this time for two reasons (not counting the relative simplicity of manually zipping directories in the first place). First, the acceptable size of the zip file and the sizes and number of individual items that can be ingested at one time will vary per DSpace instance depending on the specific configuration and available resources. Second, it may be necessary to resolve errors or perform additional quality control procedures ahead of import. Taking these further processes into account, ad-hoc compression does not provide any clear, immediate advantages.

5 Results

To process the NHD collection, the script generated 163 Simple Archive Format packages in subdirectories containing a total of 404 bitstreams, returning zero errors. We performed manual checks of random subdirectories for agreement between metadata and bitstreams to ensure that everything was paired correctly. During this manual check, we discovered one duplicate item that was already in LoboVault, and subsequently deleted it from the group of items staged for ingest. After the manual check, we zipped and uploaded the items via the batch ingest GUI. The upload returned no errors, and a manual inspection of a set of ingested items revealed no previously uncaught mistakes.

6 Discussion

Batch importing has proven to be a very useful feature for populating repositories like LoboVault and offers much promise as a scholarly communications and archiving service. The ability to ingest many items at once has obvious benefits, such as saving time and repository staff overhead, making items available quickly, and, assuming quality control has been applied to items pre-ingest, it can reduce the human error which can occur with repeated data entry, thus ensuring metadata quality and accuracy. The addition of global metadata — values which apply to every item within a collection or subset of a collection — is simple and incorporated as part of the metadata mapping process.

Walsh (2010) has described a batch loading workflow that utilized Perl scripts for item and metadata processing in two case studies with The Knowledge Bank at Ohio State University. Likewise, Silvis (2010) has outlined a process for batch ingesting items into DSpace using Java and Perl scripts on his blog. Both of these processes describe useful automated means of preparing collections and batch loading them via the command-line tool that was the only available option for batch loads prior to the DSpace 5 release. Like both of these methodologies, our method entails packaging bitstreams with DSpace XML files and the generation of a contents file that DSpace needs within each subdirectory, but capitalizes on the new batch import GUI by mapping SAF requirements to a desktop workflow.

In developing this workflow, we used Python because it is not only easy to learn, but is also a well documented technology with a large and active community. As an interpreted language, a development environment for Python can be set up quickly, and for many purposes is as easy as installing precompiled binaries available from the Python website, Enthought's Canopy, Contunuum Analytic's Anaconda, etc. We anticipate that librarians and repository administrators with an introductory set of coding skills and knowledge of the DSpace Simple Archive Format will find using the script to be fairly intuitive.

We reiterate that a generalized procedure such as this script represents requires that normalization of the content and metadata be done beforehand. In the case of the NHD ingest, we performed some metadata editing in Microsoft Excel prior to running the script. For example, we removed invalid XML characters and corrected a few spelling mistakes. Other important considerations were ensuring that all appropriate files were accessible within a specific parent directory, and a conceptual mapping of NHD metadata terms into DSpace Dublin Core was likewise completed as a pre-process. The script has a built in functionality to allow the user to view the CSV field headers and sample values to be sure the correct field name is being mapped, but it is still useful to get an idea beforehand to avoid mistakes.

Finally, batch ingestion of complex, heterogenous collections is understood to be an iterative process. When first using the batch import feature, and perhaps for each subsequent import as well, the librarian or repository administrator will find it useful to experimentally import and review records on a DSpace development server, if one is available. Alternatively, because DSpace is free and open source software, it is possible with minor configuration changes to install it as a standalone desktop application within Windows and Linux operating systems (and conceivably Mac as well). Often, small mistakes, or even large errors, are present in an SAF package due to human error or systemic mistakes made early in the process. It is quite handy to have sandboxes in which to experiment and make mistakes so the production instance of DSpace remains clean and error free. It is also useful to be able to view the records in development as a final check before the batch import into production. Nonetheless, as an outcome of the NHD ingest, together with additional collections that have since been ingested using the same process, the experience of the University of New Mexico Libraries has been a demonstrated increase in the efficiency and consistency of collection processing.

7 Conclusion

We have described a desktop workflow for batch import that may be used to process large, heterogeneous collections for ingest into DSpace. This workflow had a few initial overhead requirements: developing the script required expertise and time on the part of one of the authors; the batch import process itself required about an hour; and automation still requires human oversight, and sometimes manual error resolution, ahead of the final ingest. The process overall saved us the time and the tedium of archiving all the items one-by-one, and has added value to a bibliographic database that is a resource for American Indian/Alaskan Native health research communities. We are further able to use this same workflow to support and market repository services to other stakeholders.

Acknowledgements

We would like to thank Patricia Bradley and I-Ching Bowman for their assistance with the Native Health Database, and UNM Library IT for their support.

References

| [1] |

Averkamp, S., & Lee, J. (2009). Repurposing ProQuest Metadata for Batch Ingesting ETDs into an Institutional Repository. The Code4Lib Journal, (7). |

| [2] |

Bruns, T. A., Knight-Davis, S., Corrigan, E. K., & Brantley, S. (2014). It Takes a Library: Growing a Robust Institutional Repository in Two Years. College & Undergraduate Libraries, 21(3-4), 244-262. http://doi.org/10.1080/10691316.2014.904207 |

| [3] |

Foster, N. F., & Gibbons, S. (2005). Understanding Faculty to Improve Content Recruitment for Institutional Repositories. D-Lib Magazine, 11(01). http://doi.org/10.1045/january2005-foster |

| [4] |

Shreeves, S. L., & Teper, T. H. (2012). Looking backwards Asserting control over historic dissertations. College & Research Libraries News, 73(9), 532-535. |

| [5] |

Silvis, J. (2010, April). Batch Ingest into DSPACE based on EXCEL (Blog). |

| [6] |

Walsh, M. P. (2010). Batch Loading Collections into DSpace: Using Perl Scripts for Automation and Quality Control. Information Technology and Libraries, 29(3), 117-127. http://doi.org/10.6017/ital.v29i3.3137 |

| [7] |

Wheeler, Jonathan, & Karl Benedict. "Functional Requirements Specification for Archival Asset Management: Identification and Integration of Essential Properties of Services-Oriented Architecture Products." Journal of Map & Geography Libraries 11, no. 2 (May 4, 2015): 155-79. http://doi.org/10.1080/15420353.2015.1035474 |

About the Authors

|

Jacob L. Nash is a Resource Management Librarian in the Resources, Archives, and Discovery unit in the Health Sciences Library and Informatics Center at the University of New Mexico. He is responsible for journal selection and deselection, monitoring print and electronic resource usage, and negotiating licenses with major vendors. In addition, he consults with researchers, educators, and students on issues related to copyright and publishing.

|

|

Jonathan Wheeler is a Data Curation Librarian with the University of New Mexico's College of University Libraries and Learning Sciences. His role in the Libraries' Data Services initiatives includes the development of research data ingest, packaging and archiving work flows. His research interests include the requirements and usability of sustainable architectures for long term data preservation and the disposition of research data in response to funding requirements.

|