
TABLE OF CONTENTS

NOVEMBER/DECEMBER 2014

Volume 20, Number 11/12

10.1045/november2014-contents

ISSN: 1082-9873

EDITORIALS

Progress

Editorial by Laurence Lannom, Corporation for National Research Initiatives

New Opportunities, Methods and Tools for Mining Scientific Publications

Guest Editorial by Petr Knoth, Drahomira Herrmannova, Lucas Anastasiou and Zdenek Zdrahal, Knowledge Media Institute, The Open University, UK; Kris Jack, Mendeley Ltd., UK; Nuno Freire, The European Library, The Netherlands and Stelios Piperidis, Athena Research Center, Greece

ARTICLES

The Architecture and Datasets of Docear's Research Paper Recommender System

Article by Joeran Beel and Stefan Langer, Docear, Magdeburg, Germany; Bela Gipp, University of California Berkeley, USA & National Institute of Informatics, Tokyo, Japan; Andreas Nürnberger, Otto-von-Guericke University, Magdeburg, Germany

Abstract: In the past few years, we have developed a research paper recommender system for our reference management software Docear. In this paper, we introduce the architecture of the recommender system and four datasets. The architecture comprises multiple components, e.g. for crawling PDFs, generating user models, and calculating content-based recommendations. It supports researchers and developers in building their own research paper recommender systems, and is, to the best of our knowledge, the most comprehensive architecture that has been released in this field. The four datasets contain metadata of 9.4 million academic articles, including 1.8 million articles publicly available on the Web; the articles' citation network; anonymized information on 8,059 Docear users; information about the users' 52,202 mind-maps and personal libraries; and details on the 308,146 recommendations that the recommender system delivered. The datasets are a unique source of information to enable, for instance, research on collaborative filtering, content-based filtering, and the use of reference-management and mind-mapping software.

The Social, Political and Legal Aspects of Text and Data Mining (TDM)

Article by Michelle Brook, ContentMine; Peter Murray-Rust, University of Cambridge; Charles Oppenheim, City, Northampton and Robert Gordon Universities

Abstract: The ideas of text and data mining (TDM) and subsequent analysis go back hundreds if not thousands of years. Originally carried out manually, textual and data analysis has long been a tool that has enabled new insights to be drawn from text corpora. However, for the potential benefits of TDM to be unlocked, a number of non-technological barriers need to be overcome. These include legal uncertainty resulting from complicated copyright, database rights and licensing; the fact that some publishers are not currently embracing the opportunities TDM offers the academic community; and a lack of awareness of TDM among many academics, alongside a skills gap.

Efficient Blocking Method for a Large Scale Citation Matching

Article by Mateusz Fedoryszak and Łukasz Bolikowski, University of Warsaw, Poland

Abstract: Blocking is most commonly the first phase of record deduplication. During this phase, roughly similar entities are grouped into blocks, within which more exact clustering is performed. We present a blocking method for citation matching based on hash functions. A blocking workflow implemented in Apache Hadoop is outlined. Several hash functions are proposed and compared, with particular attention to the feasibility of using them with big data. The possibility of combining various hash functions is investigated. Finally, some technical details related to implementing the full citation matching workflow are presented.

Discovering and Visualizing Interdisciplinary Content Classes in Scientific Publications

Article by Theodoros Giannakopoulos, Ioannis Foufoulas, Eleftherios Stamatogiannakis, Harry Dimitropoulos, Natalia Manola and Yannis Ioannidis, University of Athens, Greece

Abstract: Text visualization is an important task for scientific corpora, since it represents these corpora in terms of content and thereby reinforces human cognition compared with abstract, unstructured text. In this paper, we focus on visualizing funding-specific scientific corpora in a supervised context and on discovering interclass similarities that indicate the existence of interdisciplinary research. This is achieved by training a supervised classification-visualization model based on the arXiv classification system. In addition, a funding mining submodule identifies documents of particular funding schemes, in order to generate corpora of scientific publications that share a common funding scheme (e.g. FP7-ICT). These categorized sets of documents are fed as input to the visualization model in order to generate content representations and to discover highly correlated content classes. This procedure can provide high-level monitoring, which is important for research funders and governments that need to respond quickly to new developments and trends.

Annota: Towards Enriching Scientific Publications with Semantics and User Annotations

Article by Michal Holub, Robert Moro, Jakub Sevcech, Martin Liptak and Maria Bielikova, Slovak University of Technology in Bratislava, Slovakia

Abstract: Digital libraries provide access to a vast amount of valuable information, mostly in the form of scientific publications. The large volume of documents poses challenges for organizing, searching and accessing them effectively. In this paper we present Annota, a collaborative tool that enables researchers to annotate and organize scientific publications on the Web and to share them with their colleagues. Annota is also a research platform for evaluating methods that automatically organize document collections, help users navigate large information spaces, and search intelligently for relevant entities based on the content of information artifacts, user activity and corresponding metadata. We describe the available infrastructure together with examples of the research methods we have implemented.

Experiments on Rating Conferences with CORE and DBLP

Article by Irvan Jahja, Suhendry Effendy and Roland H. C. Yap, National University of Singapore

Abstract: Conferences are the lifeblood of research in computer science, and they vary in reputation and perceived quality. One guide to conferences is conference ratings, which attempt to classify conferences by some quality measures. In this paper, we present a preliminary investigation into the possibility of systematic, unbiased and automatic conference rating algorithms using random walks, which give a relatedness score for a conference relative to a reference conference (pivot). Our experiments show that, using just a simple algorithm on the DBLP bibliographic database, it is possible to obtain ratings that correlate well with the CORE and CCF conference ratings.

A Comparison of Two Unsupervised Table Recognition Methods from Digital Scientific Articles

Article by Stefan Klampfl, Know-Center GmbH, Graz, Austria; Kris Jack, Mendeley Ltd., London, UK and Roman Kern, Knowledge Technologies Institute, Graz University of Technology, Graz, Austria

Abstract: In digital scientific articles, tables are a common form of presenting information in a structured way. However, the large variability of table layouts and the lack of structural information in digital document formats pose significant challenges for information retrieval and related tasks. In this paper we present two table recognition methods based on unsupervised learning techniques and heuristics, which automatically detect both the location and the structure of tables within an article stored as PDF. For both algorithms, table region detection first identifies the bounding boxes of individual tables from a set of labelled text blocks. In the second step, two different tabular structure detection methods extract a rectangular grid of table cells from the set of words contained in these table regions. We evaluate each stage of the algorithms separately and compare performance values on two data sets from different domains. We find that the table recognition performance is in line with state-of-the-art commercial systems and generalises to the non-scientific domain.

Towards Semantometrics: A New Semantic Similarity Based Measure for Assessing a Research Publication's Contribution

Article by Petr Knoth and Drahomira Herrmannova, KMi, The Open University

Abstract: We propose Semantometrics, a new class of metrics for evaluating research. Unlike existing Bibliometrics, Webometrics, Altmetrics, etc., Semantometrics are not based on measuring the number of interactions in the scholarly communication network, but build on the premise that full text is needed to assess the value of a publication. This paper presents the first Semantometric measure, which estimates the research contribution. We measure the semantic similarity of publications connected in a citation network and use a simple formula to assess their contribution. We carry out a pilot study in which we test our approach on a small dataset and discuss the challenges in carrying out the analysis on existing citation datasets. The results suggest that semantic similarity measures can be utilised to provide meaningful information about the contribution of research papers that is not captured by traditional impact measures based purely on citations.

Extracting Textual Descriptions of Mathematical Expressions in Scientific Papers

Article by Giovanni Yoko Kristianto, The University of Tokyo, Tokyo, Japan; Goran Topić, National Institute of Informatics, Tokyo, Japan and Akiko Aizawa, The University of Tokyo and National Institute of Informatics, Tokyo, Japan

Abstract: Mathematical concepts and formulations play a fundamental role in many scientific domains. As such, the use of mathematical expressions represents a promising method of interlinking scientific papers. The purpose of this study is to provide guidelines for annotating and detecting natural language descriptions of mathematical expressions, enabling the semantic enrichment of mathematical information in scientific papers. Under the proposed approach, we first manually annotate descriptions of mathematical expressions and assess the coverage of several types of textual span: fixed context window, apposition, minimal noun phrases, and noun phrases. We then develop a method for automatic description extraction, formulating the problem as binary classification: each mathematical expression is paired with its description candidates, and each pair is classified as correct or incorrect. Support vector machines (SVMs) with several different features were developed and evaluated for the classification task. Experimental results showed that an SVM model that uses all noun phrases delivers the best performance, achieving an F1-score of 62.25%, compared with 41.47% for the baseline (nearest noun) method.

Towards a Marketplace for the Scientific Community: Accessing Knowledge from the Computer Science Domain

Article by Mark Kröll, Stefan Klampfl and Roman Kern, Know-Center GmbH, Graz, Austria

Abstract: As scientific output is constantly growing, it is increasingly important to keep track of it, not only for researchers but also for other scientific stakeholders such as funding agencies or research companies. Each stakeholder values different types of information. A funding agency, for instance, might be more interested in the number of publications funded by its grants. However, information extraction approaches tend to be researcher-centric, as indicated, for example, by the types of named entities to be recognized. In this paper we account for additional perspectives by proposing an ontological description of one scientific domain: the computer science domain. We accordingly annotated a set of 22 computer science papers by hand and have made this data set publicly available. In addition, we have started to apply methods to automatically extract instances and report preliminary results. Taking various stakeholders' interests into account and automating the mining process are prerequisites for our vision of a "Marketplace for the Scientific Community" where stakeholders can exchange not only information but also search concepts or annotated data.

AMI-diagram: Mining Facts from Images

Article by Peter Murray-Rust, University of Cambridge, UK; Richard Smith-Unna, University of Cambridge, UK; Ross Mounce, University of Bath, UK

Abstract: AMI-Diagram is a tool for mining facts from diagrams and converting the graphics primitives into XML. Targets include X-Y plots, bar charts, chemical structure diagrams and phylogenetic trees. Part of the ContentMine framework for automatically extracting science from the published literature, AMI can ingest born-digital diagrams as latent vectors, pixel diagrams or scanned documents. For high-quality, high-resolution diagrams the process is automatic; command line parameters can be used for noisy or complex diagrams. This article provides an overview of the tool, which is currently being deployed to alpha-testers, especially in chemistry and phylogenetics.

The ContentMine Scraping Stack: Literature-scale Content Mining with Community-maintained Collections of Declarative Scrapers

Article by Richard Smith-Unna and Peter Murray-Rust, University of Cambridge, UK

Abstract: Successfully mining scholarly literature at scale is inhibited by technical and political barriers that have been only partially addressed by publishers' application programming interfaces (APIs). Many of those APIs have restrictions that inhibit data mining at scale, and while only some publishers actually provide APIs, almost all publishers make their content available on the web. Current web technologies should make it possible to harvest and mine the scholarly literature regardless of the source of publication, and without using specialised programmatic interfaces controlled by each publisher. Here we describe the tools developed to address this challenge as part of the ContentMine project.

GROTOAP2 — The Methodology of Creating a Large Ground Truth Dataset of Scientific Articles

Article by Dominika Tkaczyk, Pawel Szostek and Łukasz Bolikowski, Centre for Open Science, Interdisciplinary Centre for Mathematical and Computational Modelling, University of Warsaw, Poland

Abstract: Scientific literature analysis improves knowledge propagation and plays a key role in understanding and assessing scholarly communication in the scientific world. In recent years many tools and services for analysing the content of scientific articles have been developed. One of the most important tasks in this research area is understanding the roles of different parts of the document. It is impossible to build effective solutions for problems related to document fragment classification, and to evaluate their performance, without a reliable test set that contains both input documents and the expected results of classification. In this paper we present GROTOAP2, a large dataset of ground truth files containing labelled fragments of scientific articles in PDF format, useful for training and evaluating document content analysis solutions. GROTOAP2 was successfully used for training CERMINE, our system for extracting metadata and content from scientific articles. The dataset is based on articles from the PubMed Central Open Access Subset. GROTOAP2 is published under an Open Access license. The semi-automatic method used to construct GROTOAP2 is scalable and can be adjusted for building large datasets from other data sources. The article presents the content of GROTOAP2, describes the entire creation process and reports the evaluation methodology and results.

A Keyquery-Based Classification System for CORE

Article by Michael Völske, Tim Gollub, Matthias Hagen and Benno Stein, Bauhaus-Universität Weimar, Weimar, Germany

Abstract: We apply keyquery-based taxonomy composition to compute a classification system for the CORE dataset, a shared crawl of about 850,000 scientific papers. Keyquery-based taxonomy composition can be understood as a two-phase hierarchical document clustering technique that uses search queries as cluster labels. In the first phase, the document collection is indexed by a reference search engine, and each document is tagged with the search queries for which it is relevant, its so-called keyqueries. In the second phase, a hierarchical clustering is formed from the keyqueries in an iterative process. We use the explicit topic model ESA as the document retrieval model to index the CORE dataset in the reference search engine. Under the ESA retrieval model, documents are represented as vectors of similarities to Wikipedia articles, a methodology proven to be advantageous for text categorization tasks. Our paper presents the generated taxonomy and reports on quantitative properties such as document coverage and processing requirements.

CONFERENCE REPORT

Report on the Research Data Alliance (RDA) 4th Plenary Meeting

Conference Report by Yolanda Meleco, Research Data Alliance/U.S.

Abstract: The Research Data Alliance's (RDA) 4th Plenary Meeting was held 22-24 September 2014 in Amsterdam, Netherlands. Funded by the European Commission, the United States National Science Foundation and the Australian National Data Service, and launched in March 2013, the RDA has grown to more than 2,300 members in 96 countries in less than two years. With a mission to build the social, organizational and technical infrastructure needed to reduce barriers to data sharing and accelerate data-driven innovation worldwide, the RDA is demonstrating success through its rapid growth and the release of its first outputs by four of its Working Groups during the Meeting. More than 550 individuals attended the meeting, representing numerous disciplines and areas of interest, including data management, astronomy, education, health sciences and geology. This report highlights accomplishments announced by working group members during the event, ongoing initiatives of the RDA and opportunities identified for further advancing the mission of the RDA in the near future.

NEWS & EVENTS

In Brief: Short Items of Current Awareness

In the News: Recent Press Releases and Announcements

Clips & Pointers: Documents, Deadlines, Calls for Participation

Meetings, Conferences, Workshops: Calendar of Activities Associated with Digital Libraries Research and Technologies


FEATURED DIGITAL COLLECTION

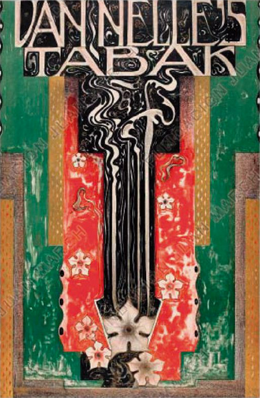

Image from "All of our catalogues collection", catalogue "The Avant-Garde Applied (1890-1950)".

[Courtesy of Fundación Juan March. Used with permission.]

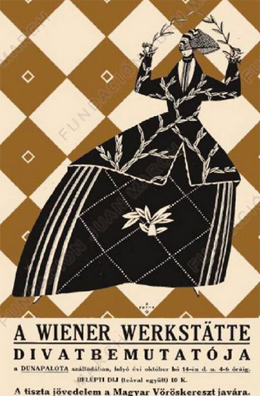

Image from "All of our catalogues collection", catalogue "The Avant-Garde Applied (1890-1950)".

[Courtesy of Fundación Juan March. Used with permission.]

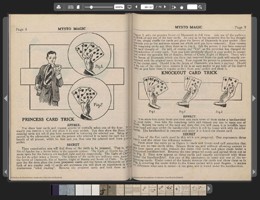

Multi-device book viewer with progressive loading of pages, from the Library of Illusionism.

[Courtesy of Fundación Juan March. Used with permission.]

The Fundación Juan March (FJM) is a non-profit organization based in Madrid, Spain, devoted to culture and science, which dedicates its resources and activities to the fields of Arts, Science and the Humanities. Its digital holdings include thematic collections, a sound archive of music and words, the editorial production of the Foundation and the research resulting from grants awarded over decades. Moreover, there is an entire body of born-digital materials from the events held at the Foundation in video, audio, and image formats. The mission of the FJM's Library is to progressively preserve and disseminate all these materials, applying technological advances to improve the use, understanding and visualization of the information.

Digitization began in 2008 as an essential step towards preserving the materials and making them available by allowing Internet access to rights-free content. The objective is to foster the study of particular subject domains and, in the medium term, to achieve cultural and social growth. In November 2011 the knowledge portal on Contemporary Spanish Music "CLAMOR" was launched. This was the first of seven thematic digital collections that have been made available to the public over the past three years. These collections, and the thousands of files freely accessible via the Foundation's web site, confirm the priority the FJM gives to disseminating and providing access to its specialized content.

A knowledge portal is understood as a means by which interested users can obtain comprehensive, high-quality structured information on a topic. This is how CLAMOR was conceived: a digital collection of contemporary Spanish music performed at the Foundation since 1975, with the aim of reconstructing each event by respecting the sound of the recording and publishing the program and photographs of the concert, interview or lecture, the biography of the composer and a summary of his or her musical work.

In much the same spirit, other portals were designed, such as the Linz Archive of the Spanish Transition, the archive of Spanish dancer Antonia Mercé "La Argentina", the personal archive of the composer Joaquín Turina, and the personal library of writer Julio Cortázar. The portals help users not only to find the content of the archives but also to find other materials that support their research in the subject matter.

Other collections aim to facilitate learning and knowledge sharing and to make out-of-print publications available; this is the case with the hundred books that form the historic Library of Illusionism "Sim Sala Bim" about magic, and the portal "All our art catalogs since 1973", which contains the full text of more than 180 catalogs of the art exhibitions organised by the Foundation.

Islandora, whose initial setup was carried out together with the Canadian company DiscoveryGarden Inc., is the repository framework chosen to implement the portals. Since its inception, the repository has grown to 1 million stored and preserved files and over 11 million RDF relations between objects. The FJM digital repository continues to incorporate new interactive and user-friendly features such as data visualizations (maps, timelines), multi-device document viewers with progressive loading (FlexPaper, Internet Archive BookReader), responsive web design and recommendation systems.

Site statistics reveal that the FJM formula has been successful, with a significant increase in web traffic, interlibrary loan requests, image requests for teaching and publication, research, performances and recordings of speeches and articles. More collections with the same philosophy will follow, some already under construction.

D-LIB EDITORIAL STAFF

Laurence Lannom, Editor-in-Chief

Allison Powell, Associate Editor

Catherine Rey, Managing Editor

Bonita Wilson, Contributing Editor
